Effortlessly manage, experiment with, and monitor your LLM prompts.

Stop waiting on engineering to update prompts and guessing their impact.

Start empowering the whole team to manage, monitor and optimize their LLM prompts.

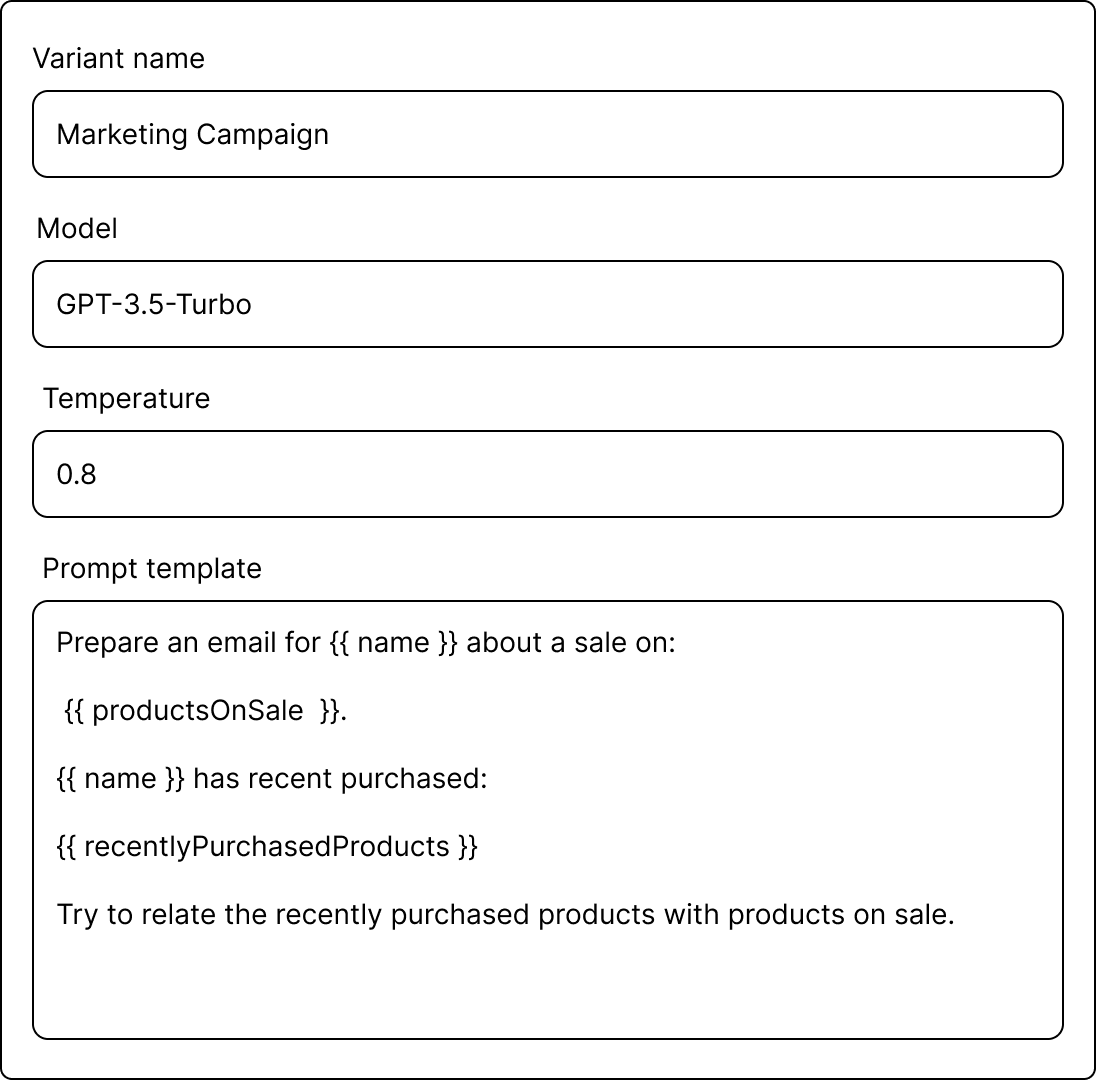

Manage all your prompts within a single dashboard

Configure prompts with different models (e.g., GPT-3.5, GPT-4), temperatures, and text templates. Use template variables to insert dynamic data into individual prompts.
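To make the idea of template variables concrete, here is a minimal sketch of how a configured prompt might be rendered. The `{{name}}` placeholder syntax, the `renderTemplate` helper, and the `promptConfig` field names are assumptions for illustration only; AB Prompt's actual template syntax and configuration schema are not shown on this page.

```javascript
// Hypothetical helper: assumes {{name}}-style placeholders, which may differ
// from the syntax AB Prompt actually uses.
function renderTemplate(template, vars) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, name) =>
    // Leave unknown placeholders untouched so missing variables are easy to spot.
    Object.prototype.hasOwnProperty.call(vars, name) ? String(vars[name]) : match
  );
}

// Illustrative prompt configuration: model, temperature, and a text template.
const promptConfig = {
  model: "gpt-4",
  temperature: 0.7,
  template: "Summarize the following review for {{audience}}: {{review}}",
};

const rendered = renderTemplate(promptConfig.template, {
  audience: "a support agent",
  review: "The app crashes on login.",
});

console.log(rendered);
```

Leaving unmatched placeholders in the output, rather than replacing them with an empty string, is a deliberate choice in this sketch: a missing variable stays visible in the final prompt.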

Get started now

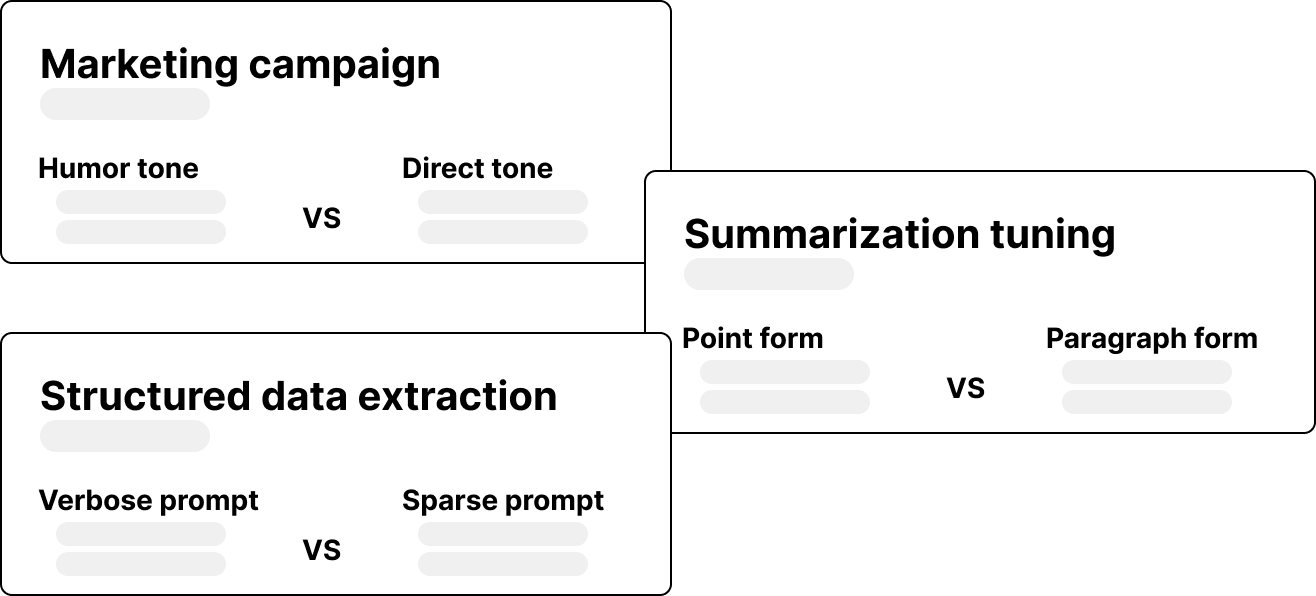

Run experiments to optimize your prompts

Test different prompt configurations side by side in real-time experiments. Assign weights to control how often each variant is chosen. Observe and analyze how each variant performs over time.
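As a rough illustration of what weighting means, the sketch below picks a variant with probability proportional to its weight. This is a generic weighted random selection, not AB Prompt's documented implementation; the `pickVariant` function and the shape of the `variants` array are hypothetical.

```javascript
// Generic weighted random choice (an assumption about how weights could work,
// not the service's actual algorithm). `random` is injectable for testing.
function pickVariant(variants, random = Math.random) {
  const total = variants.reduce((sum, v) => sum + v.weight, 0);
  if (total <= 0) {
    throw new Error("At least one variant needs a positive weight");
  }
  // Walk the variants, subtracting each weight until the threshold is crossed.
  let threshold = random() * total;
  for (const variant of variants) {
    threshold -= variant.weight;
    if (threshold < 0) return variant;
  }
  // Guard against floating-point rounding at the upper edge.
  return variants[variants.length - 1];
}

// Hypothetical variants: "A" should be chosen about three times as often as "B".
const variants = [
  { name: "A", weight: 3 },
  { name: "B", weight: 1 },
];

console.log(pickVariant(variants).name);
```

With weights of 3 and 1, variant A would be served for roughly 75% of calls over a large number of runs.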

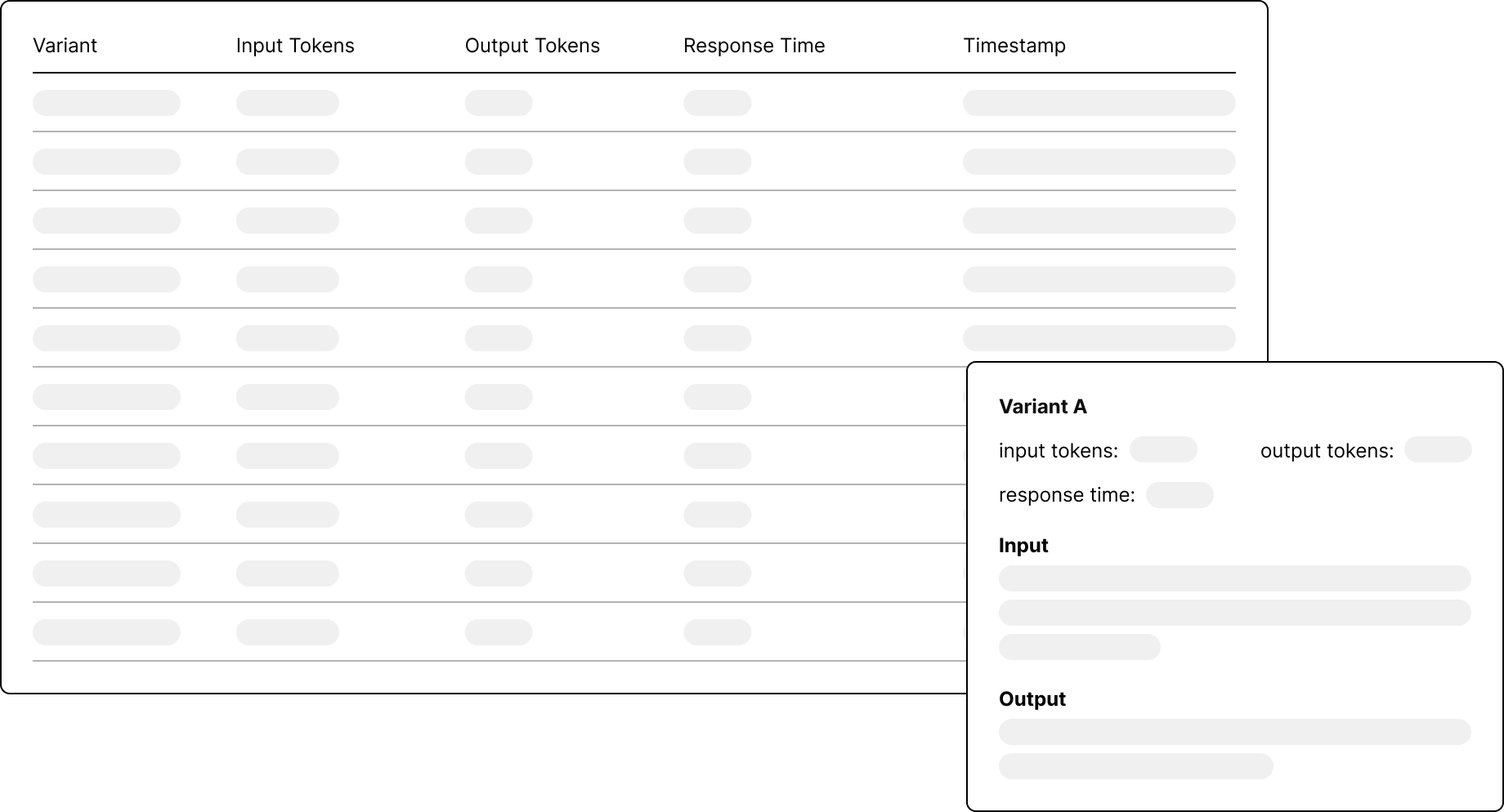

Begin optimizing

Monitor prompt performance in real-time

Track how your prompts are performing with real-time metrics. View token usage, response times, and other key indicators. Review both the input prompt text and the generated output from the LLM. Make informed decisions to fine-tune your prompt setups.
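For a sense of how such metrics can inform a decision, the sketch below aggregates token usage and response time per variant. The run records and their field names (`variant`, `promptTokens`, `completionTokens`, `latencyMs`) are invented for illustration; the page does not describe how the dashboard exposes or exports this data.

```javascript
// Hypothetical run records; field names are assumptions, not a documented format.
const runs = [
  { variant: "A", promptTokens: 120, completionTokens: 80, latencyMs: 900 },
  { variant: "A", promptTokens: 100, completionTokens: 60, latencyMs: 1100 },
  { variant: "B", promptTokens: 110, completionTokens: 200, latencyMs: 1500 },
];

// Group runs by variant and compute total tokens and average latency.
function summarizeRuns(records) {
  const totals = new Map();
  for (const run of records) {
    const t = totals.get(run.variant) || { runs: 0, tokens: 0, latencyMs: 0 };
    t.runs += 1;
    t.tokens += run.promptTokens + run.completionTokens;
    t.latencyMs += run.latencyMs;
    totals.set(run.variant, t);
  }
  return [...totals].map(([variant, t]) => ({
    variant,
    runs: t.runs,
    totalTokens: t.tokens,
    avgLatencyMs: t.latencyMs / t.runs,
  }));
}

const summary = summarizeRuns(runs);
console.log(summary);
```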

Start monitoring your prompts

    fetch(
      "https://api.abprompt.dev/experiments/<your-experiment-id>",
      {
        method: "POST",
        headers: {
          "Authorization": "Bearer <your-api-key>",
          "Content-Type": "application/json",
        },
        body: JSON.stringify({
          openAIAPIKey: '<your-openai-api-key>',
          templateVars: {
            /* key value pairs for variable interpolation within your prompt template. */
          },
          metadata: {
            /* optional key value pairs for attaching metadata to your experiment run. You'll be able to reference the metadata within the dashboard. */
          }
        })
      }
    );

Frictionless integration

Configure an experiment in minutes. Then make API calls to a single, minimal endpoint.
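In application code, the request shown on this page could be wrapped in a small helper. The sketch below reuses the same URL, headers, and body fields; the `buildExperimentRequest` and `runExperiment` names, the error handling, and the assumption that the endpoint responds with JSON are ours and are not confirmed by the page.

```javascript
// Builds the URL and fetch options for one experiment run.
// Mirrors the request shown on this page; the function itself is hypothetical.
function buildExperimentRequest({ experimentId, apiKey, openAIAPIKey, templateVars = {}, metadata = {} }) {
  return {
    url: `https://api.abprompt.dev/experiments/${encodeURIComponent(experimentId)}`,
    options: {
      method: "POST",
      headers: {
        "Authorization": `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ openAIAPIKey, templateVars, metadata }),
    },
  };
}

// Sends the request. Assumes (unverified) that the response body is JSON.
// `fetchImpl` is injectable so the call can be stubbed in tests.
async function runExperiment(params, fetchImpl = globalThis.fetch) {
  const { url, options } = buildExperimentRequest(params);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Experiment request failed with status ${response.status}`);
  }
  return response.json();
}

// Example: inspect the request without sending it.
const request = buildExperimentRequest({
  experimentId: "<your-experiment-id>",
  apiKey: "<your-api-key>",
  openAIAPIKey: "<your-openai-api-key>",
  templateVars: { audience: "a support agent" },
  metadata: { userId: "example-user" },
});
console.log(request.url);
```

Separating request construction from sending keeps the network call easy to stub, and keeps the API keys in one place so they can be loaded from environment variables rather than hard-coded.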

Pricing

Start with AB Prompt's Basic plan. Upgrade to Paid for unlimited access.

Get in touch

Feedback, suggestions, concerns? We're eager to hear it all!