Experiments

AB Prompt lets you set up two prompt configurations and randomly selects one, based on the weights you assign, each time you make an API call. This is useful for running experiments that compare the effectiveness of different LLMs, test various tones and styles, or measure the impact of prompt phrasing on performance.

How it works

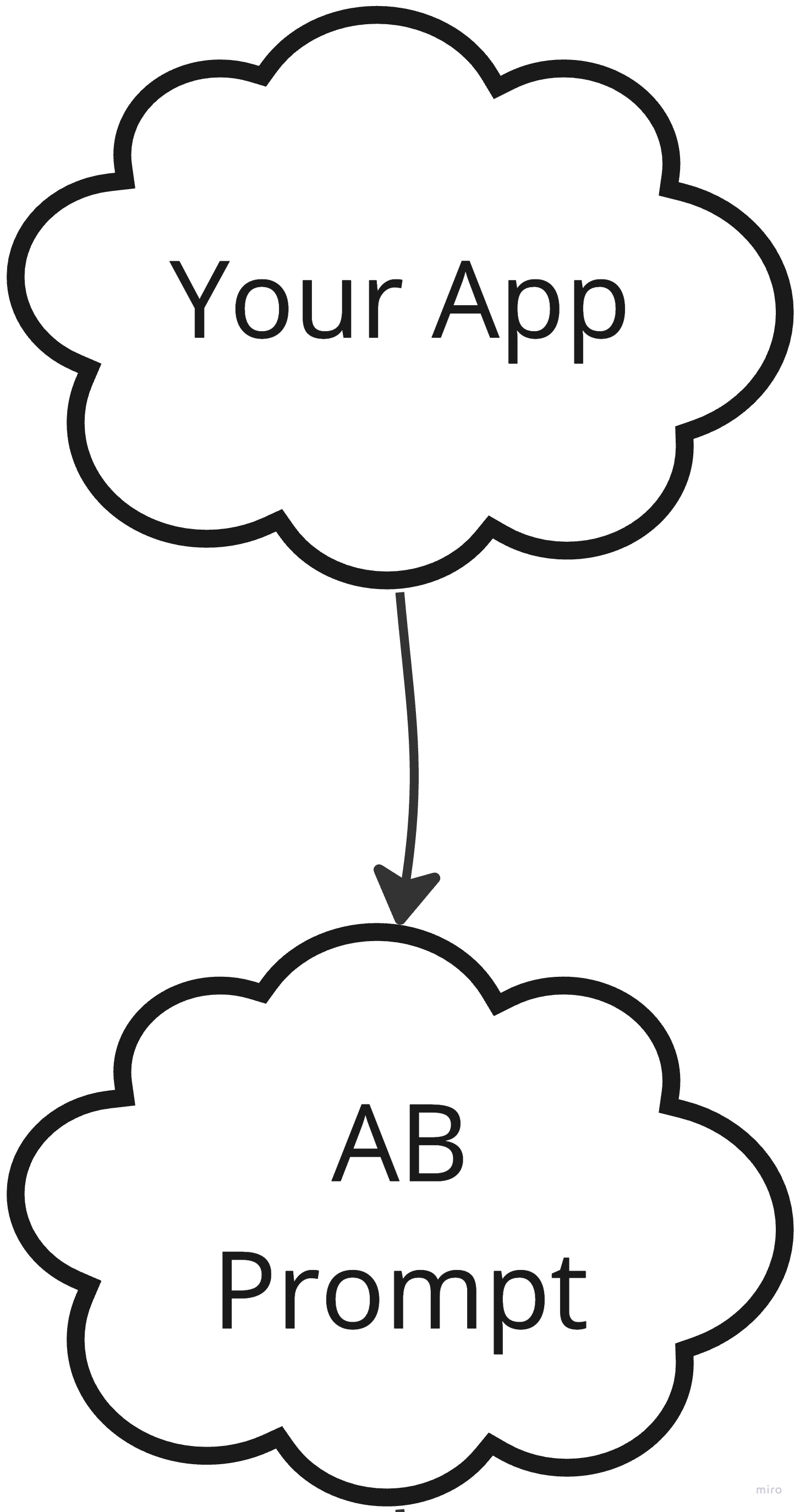

Initiating the Call

Each experiment gets its own unique API endpoint. Your application's server sends a POST request to that endpoint, passing along API keys and data needed for rendering your prompt template.

Weight-Based Variant Selection

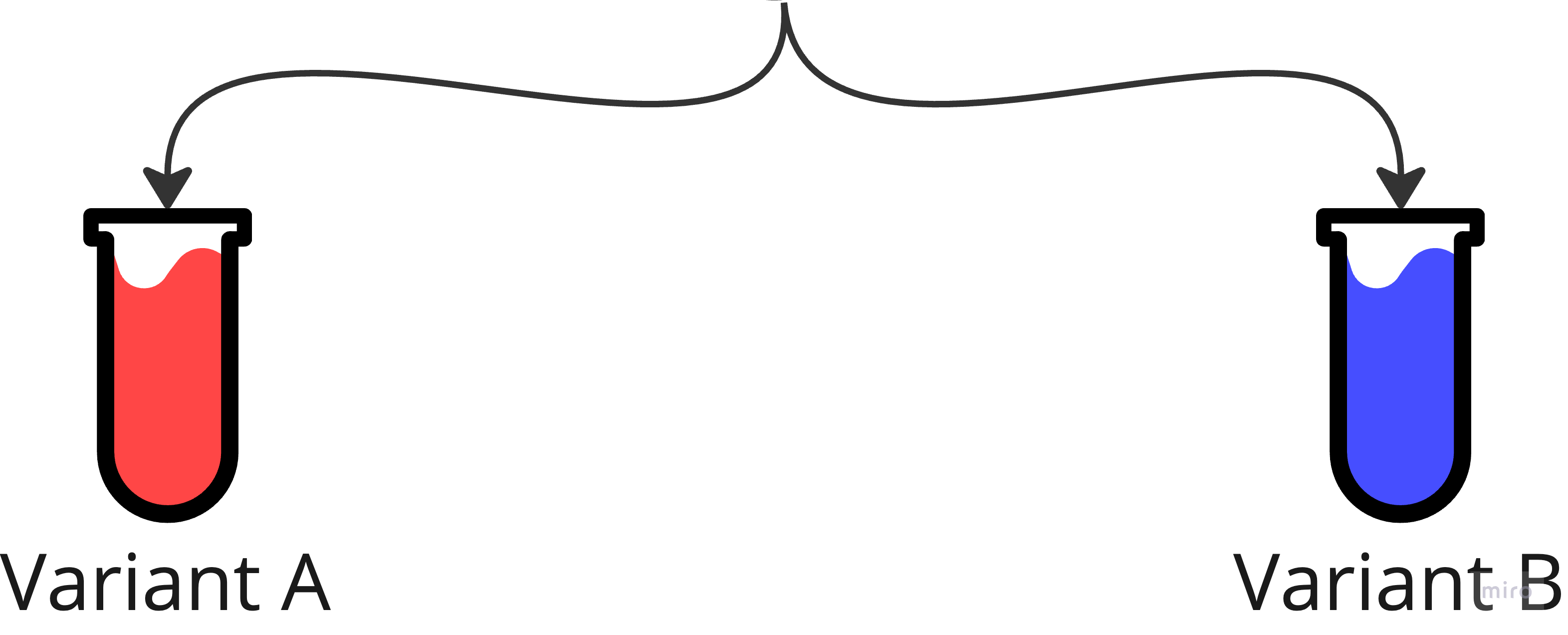

AB Prompt uses the configured weights to make a probabilistic decision on which variant to select. Because the choice is made independently for each request, different requests can be served by different configurations, which is what makes AB testing possible.
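To make the weighting concrete, here is a minimal sketch of weight-proportional selection. It is not AB Prompt's actual implementation, which this page does not describe. The variant names `A` and `B`, the 70/30 split, and the `pick_variant` helper are all hypothetical.

```python
import random


def pick_variant(weights, rng=random):
    """Return one variant name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical experiment: variant A should receive roughly 70% of requests.
weights = {"A": 70, "B": 30}

# A seeded generator keeps this demonstration repeatable.
rng = random.Random(42)
counts = {name: 0 for name in weights}
for _ in range(10_000):
    counts[pick_variant(weights, rng)] += 1

print(counts)  # the split approaches 70/30 as the number of requests grows
```

Any single request can land on either variant. The weights only describe the long-run share of traffic each variant receives.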

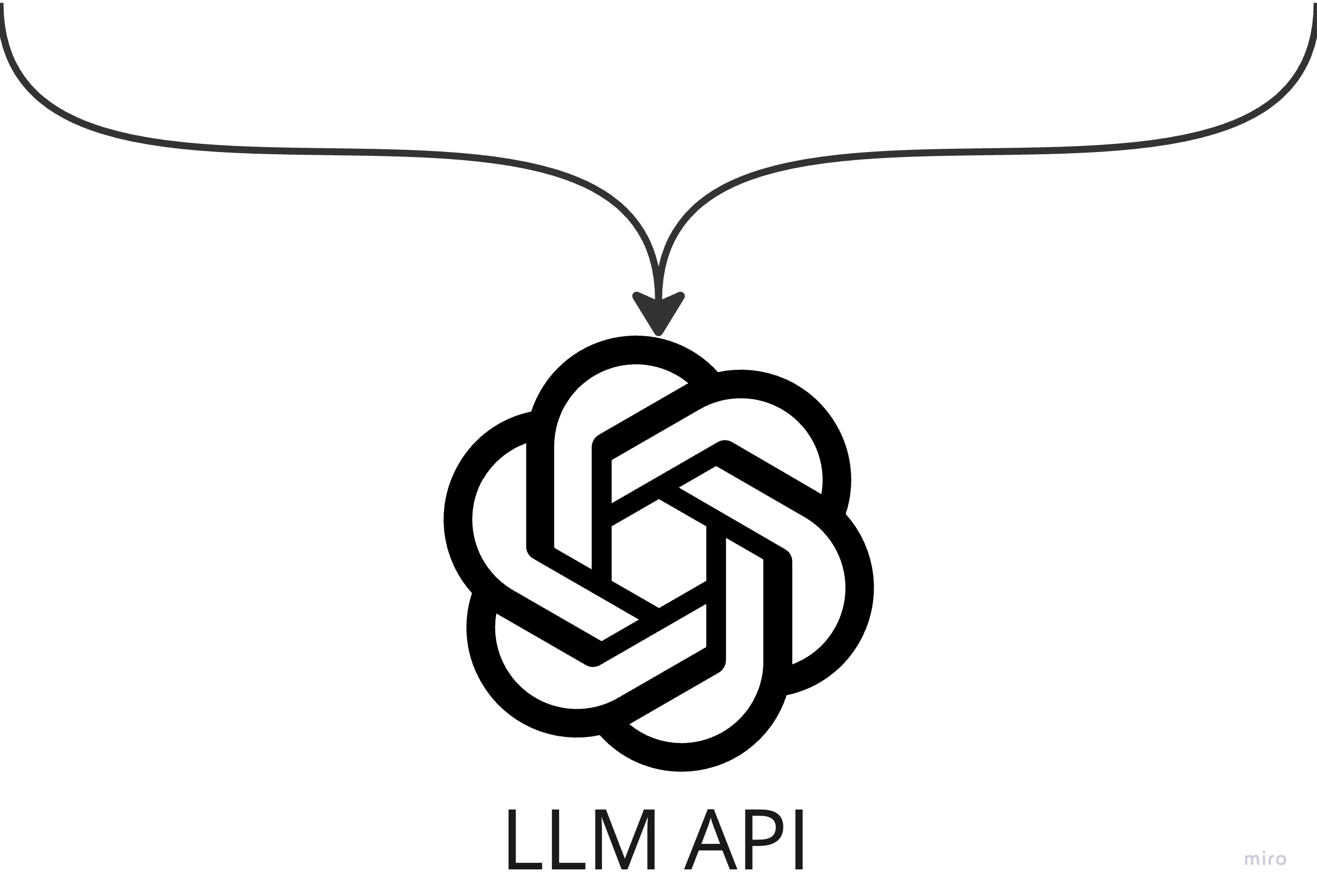

Underlying Model Interaction

AB Prompt then calls the underlying large language model (LLM) API with the selected variant. When the response arrives, AB Prompt logs the result and returns the data to your application's server.
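From the application's side, the whole round trip is a single HTTP call. The sketch below shows one way a server might build and send that request using only the Python standard library. The endpoint URL, the `Authorization` header scheme, the `data` payload field, and the assumption of a JSON response are placeholders, not AB Prompt's documented API. Take the real values from your experiment's settings.

```python
import json
import urllib.request

# Hypothetical endpoint: each experiment has its own URL, which this
# sketch does not know. Replace it with the one shown for your experiment.
EXPERIMENT_URL = "https://example.invalid/experiments/my-experiment"


def build_request(url, api_key, template_data):
    """Build a POST request carrying an API key and the template data."""
    body = json.dumps({"data": template_data}).encode("utf-8")  # assumed payload shape
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
    )


def call_experiment(url, api_key, template_data, timeout=30):
    """Send the request and decode the response, assumed here to be JSON."""
    req = build_request(url, api_key, template_data)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Building the request needs no network access, so it can be inspected safely.
req = build_request(EXPERIMENT_URL, "YOUR_API_KEY", {"customer_name": "Ada"})
print(req.get_method(), req.full_url)
```

The application never chooses a variant itself. It sends the same request every time and lets the experiment endpoint decide, so the calling code does not need to change when weights are adjusted.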